Let’s imagine you’re teaching someone English and you’re trying to explain homonyms. Take bark (the sound a dog makes) and bark (the outer covering of a tree). Or bat (a flying mammal) and bat (a wooden or metal club used in sports). Or bank (a financial institution) and bank (the land alongside a body of water).

Oof. That’s a bit hard to explain. The meaning comes not just from the word itself but from the words around it. Context provides the meaning.

Now imagine you’re reading customer reviews for an ecommerce company. One customer says, “When I opened the shirt out of the box it had a number of tears in it!” and another customer says, “When I gave this shirt to my sister she was so happy she burst into tears!”

A human would immediately know the difference between these two reviews. We would understand that the first is negative and the second is positive, and that “tears” means a rip in the fabric in one and crying in the other.

But when it comes to analyzing these reviews programmatically, it gets trickier. Older natural language processing tools treated both occurrences of “tears” as the same keyword. After all, it’s the same word…

So if we want machines to analyze text effectively (thousands of customer reviews, for example), we have to solve the problem of context providing the meaning.

That’s where contextual embeddings come in.

What are embeddings?

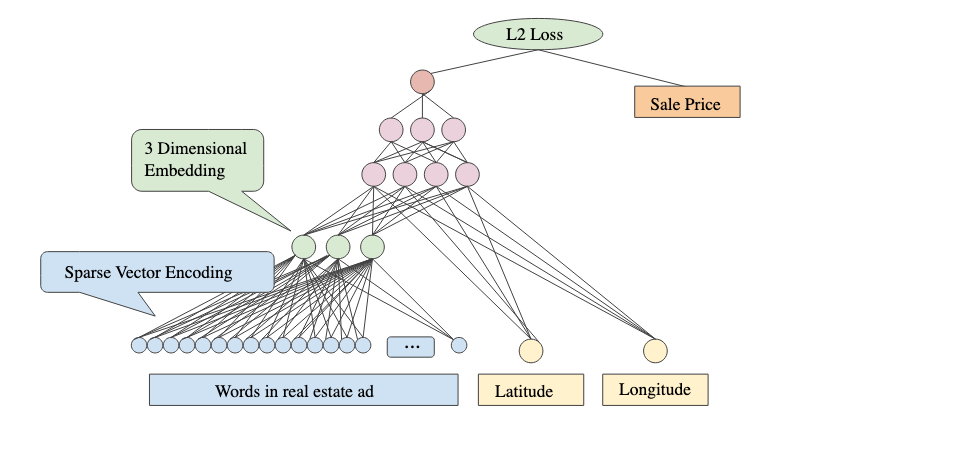

Embeddings are numerical representations of objects, such as words or images, designed to capture their semantic relationships and enable them to be processed by machine learning algorithms.

At CustomerIQ we help teams analyze large bodies of text, so we use text embeddings. Text embeddings (word embeddings are the simplest kind) convert words and passages into a numerical form that computers can work with.

But these numbers aren't random.

Instead, they're calculated in a way that keeps similar words close together when you imagine the numbers as points in space. So, words like 'cat' and 'dog' would be closer than 'cat' and 'carpet'.
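To make "close together" concrete, here is a minimal Python sketch that measures closeness with cosine similarity, a common way to compare embeddings. The three-dimensional vectors are made up for illustration; real embedding models produce vectors with hundreds or thousands of dimensions.

```python
import math

# Hypothetical toy embeddings; real models output much longer vectors.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "carpet": [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

cat_dog = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["dog"])
cat_carpet = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["carpet"])
print(f"cat vs dog:    {cat_dog:.3f}")
print(f"cat vs carpet: {cat_carpet:.3f}")
```

Cosine similarity looks only at direction, not length, which is why it is a popular choice for comparing embeddings of texts with different lengths.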

To get these numerical representations (called vectors), we train a computer program on lots and lots of text. The computer program learns from the text that 'cat' and 'dog' often appear in similar situations, but 'cat' and 'carpet' usually don't.
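Modern models learn their vectors with neural networks, but the intuition that words appearing in similar situations end up with similar vectors can be shown with a much simpler count-based stand-in. The sketch below uses a tiny made-up corpus, builds each word's vector from counts of the words within two positions of it, and compares those vectors with cosine similarity. It illustrates the idea only; it is not how production embedding models are trained.

```python
import math
from collections import Counter, defaultdict

# A tiny made-up corpus for illustration only.
CORPUS = [
    "the cat sat on the mat",
    "the dog sat on the mat",
    "the cat chased the ball",
    "a dog chased the ball",
    "the carpet was rolled up",
    "the carpet was cleaned",
]
WINDOW = 2  # how many words on each side count as "context"

def cooccurrence_vectors(sentences, window):
    """Map each word to a Counter of the words seen near it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i, word in enumerate(words):
            lo, hi = max(0, i - window), min(len(words), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][words[j]] += 1
    return vectors

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)

vectors = cooccurrence_vectors(CORPUS, WINDOW)
print("cat vs dog:   ", round(cosine(vectors["cat"], vectors["dog"]), 3))
print("cat vs carpet:", round(cosine(vectors["cat"], vectors["carpet"]), 3))
```

"cat" and "dog" share neighbors like "sat", "on", and "chased", so their vectors point in similar directions; "carpet" shares little beyond "the".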

Embeddings recently evolved

But what about our homonym problem? Cat, carpet, and dog are obviously different words…

Traditional embedding models, such as word2vec and GloVe, represent each word with a single vector, so all meanings of a homonym are merged into one representation. That single vector can’t capture which meaning a word carries in a particular sentence.
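A minimal sketch of what "a single vector per word" means in practice: a static embedding is essentially a lookup table, so "tears" gets the same vector in both reviews from the introduction. The table and its values are hypothetical placeholders.

```python
# Hypothetical static embedding table: one fixed vector per word.
STATIC_EMBEDDINGS = {
    "tears": [0.4, 0.6, 0.1],
    "shirt": [0.7, 0.1, 0.3],
    "happy": [0.1, 0.9, 0.2],
}

def embed_word_static(word, sentence):
    """Return the word's vector. The sentence is ignored, which is the problem."""
    return STATIC_EMBEDDINGS[word.lower()]

review_a = "When I opened the shirt out of the box it had a number of tears in it!"
review_b = "When I gave this shirt to my sister she was so happy she burst into tears!"

vec_a = embed_word_static("tears", review_a)
vec_b = embed_word_static("tears", review_b)
print(vec_a == vec_b)  # True: both meanings share one vector
```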

More recent models, however, have made progress on this issue. Transformer-based models like BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer) produce what are known as "contextual embeddings". This means they generate a different vector for a word, sentence, or paragraph depending on the context it’s used in.

So, in these models, 'bank' would have one vector when used in a financial context (like "I went to the bank to withdraw money"), and a different vector when used in a natural context (like "He sat on the bank of the river").
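To make the difference concrete, here is a deliberately simplified Python sketch of self-attention, the mechanism transformer models like BERT use to mix context into each word's vector. It assumes made-up 3-d word vectors and skips the learned query, key, and value projections, multiple attention heads, and stacked layers that real models use, so it shows the idea rather than how BERT actually computes its embeddings. The same starting vector for "bank" ends up leaning toward finance in one sentence and toward nature in the other.

```python
import math

# Hypothetical 3-d word vectors; dimensions loosely mean [finance, nature, other].
WORD_VECTORS = {
    "bank": [1.0, 1.0, 0.0],  # ambiguous on its own
    "money": [1.0, 0.0, 0.0],
    "withdraw": [0.9, 0.0, 0.1],
    "river": [0.0, 1.0, 0.0],
    "sat": [0.0, 0.2, 0.8],
}

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(words):
    """Simplified scaled dot-product self-attention with no learned weights:
    each output vector is a similarity-weighted average of all input vectors."""
    vectors = [WORD_VECTORS[w] for w in words]
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(dim)]
        )
    return outputs

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

finance = ["withdraw", "money", "bank"]
nature = ["sat", "river", "bank"]
bank_finance = self_attention(finance)[finance.index("bank")]
bank_nature = self_attention(nature)[nature.index("bank")]

print("financial 'bank' vs money:", round(cosine(bank_finance, WORD_VECTORS["money"]), 3))
print("river 'bank' vs money:    ", round(cosine(bank_nature, WORD_VECTORS["money"]), 3))
```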

That brings us to today

Once we have contextual embeddings for our text, we can use them for different tasks, like figuring out whether a movie review is positive or negative, translating text from one language to another, or grouping similar texts together to identify themes.

Or, in the case of CustomerIQ, we can:

- Discover themes in our customer feedback by clustering semantically similar pieces of feedback.

- Classify customer feedback into helpful user-defined categories like pains, preferences, and needs.

- Search our database of customer feedback for mentions of features, problems, or requests, based on the semantic similarity between what customers said and the search term.
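As a rough illustration of the clustering idea, the sketch below groups feedback with k-means, one common clustering algorithm. The source doesn't say which algorithm CustomerIQ uses, so treat the choice as an assumption; the feedback snippets and their 2-d vectors are also invented for the example.

```python
import math

# Hypothetical 2-d embeddings for feedback highlights (real ones are much longer).
FEEDBACK = {
    "Checkout page keeps crashing": [0.9, 0.1],
    "App freezes when I pay": [0.85, 0.2],
    "Payment screen errors out": [0.95, 0.15],
    "Love the new dark mode": [0.1, 0.9],
    "Dark theme looks great": [0.15, 0.85],
    "Please add more color themes": [0.2, 0.95],
}

def mean(points):
    return [sum(coord) / len(points) for coord in zip(*points)]

def kmeans(points, k, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, then move
    each centroid to the mean of its assigned points."""
    # Deterministic seeding with k evenly spaced points; real implementations
    # usually use smarter seeding such as k-means++.
    centroids = [list(points[i * len(points) // k]) for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: nearest centroid by Euclidean distance.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster empties out
                centroids[c] = mean(members)
    return labels

texts = list(FEEDBACK)
labels = kmeans([FEEDBACK[t] for t in texts], k=2)
for cluster in sorted(set(labels)):
    print(f"Theme {cluster}:", [t for t, label in zip(texts, labels) if label == cluster])
```

Each resulting cluster is a candidate theme (here, payment crashes versus theming); naming the theme is a separate step.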

Using contextual embeddings, we can combine human-like semantic understanding with the scale and speed of machines.

How CustomerIQ uses embeddings to revolutionize qualitative analysis

When you upload feedback submissions to a folder in CustomerIQ, our AI automatically extracts highlights (key points, phrases, or summaries), then converts those highlights into embeddings.

By doing so, we’re able to perform a number of critical functions that were previously limited by the high degree of effort that human analysis requires:

- Clustering: We run calculations on a set of embeddings to identify which embeddings are most similar to each other. From this we derive themes across the text. Embeddings let us do this with remarkable speed and accuracy.

- Classification: By embedding a series of predetermined categories (bugs, requests, and complaints, for example), we can calculate how semantically similar each highlight’s embedding is to each category we’ve created, and sort each highlight into the category it most closely matches.

- Semantic search: By embedding a search query and comparing it to the embeddings in view, we can rank highlights by those that are most semantically similar. This is especially useful for customer feedback analysis because users and customers don’t always use the same words to describe features and products as their makers do. With semantic search, we can generally identify the topics we’re after without knowing the exact words to describe them.
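Classification and semantic search both come down to ranking by similarity, which the minimal sketch below shows. The highlight, category, and query vectors are hypothetical stand-ins for what an embedding model would return; no particular model or CustomerIQ API is assumed.

```python
import math

# Hypothetical stand-ins for embedding-model output (3-d for readability).
HIGHLIGHTS = {
    "The export button does nothing when clicked": [0.9, 0.1, 0.3],
    "It would be great to schedule reports": [0.1, 0.9, 0.1],
    "Support took three days to reply": [0.2, 0.1, 0.9],
}
CATEGORIES = {
    "bugs": [1.0, 0.0, 0.1],
    "requests": [0.0, 1.0, 0.0],
    "complaints": [0.1, 0.0, 1.0],
}
# Hypothetical embedding of the query "exporting data is broken".
QUERY_VECTOR = [0.8, 0.2, 0.2]

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def classify(vector, categories):
    """Assign the category whose embedding is most similar to the vector."""
    return max(categories, key=lambda name: cosine(vector, categories[name]))

def semantic_search(query_vector, highlights, top_k=2):
    """Rank highlights by similarity to the query and return the top_k texts."""
    ranked = sorted(
        highlights,
        key=lambda text: cosine(query_vector, highlights[text]),
        reverse=True,
    )
    return ranked[:top_k]

for text, vector in HIGHLIGHTS.items():
    print(f"{classify(vector, CATEGORIES):<10} {text}")
print(semantic_search(QUERY_VECTOR, HIGHLIGHTS))
```

Taking the single best match always assigns some category; a minimum-similarity threshold is a common way to leave weak matches unclassified instead.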

Looking forward

Using embeddings for content clustering, classification, and search can lead to more accurate, efficient, and interpretable results than we can produce manually.

This has broader implications than just speeding up our current synthesis projects.

What data could we synthesize that we haven’t considered because the task would be too large?

What sources of feedback contain valuable customer insights waiting to be found?